Specific Challenge Tasks

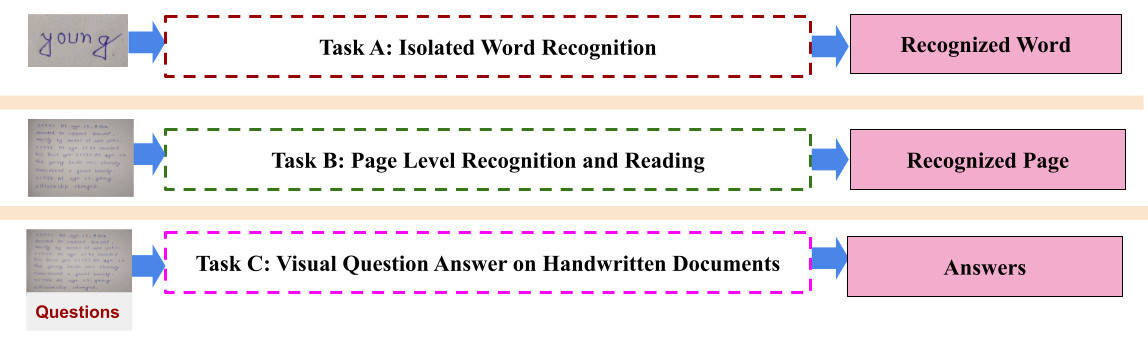

For the challenge, we focus on the following languages: English, Hindi, Bangla, and Telugu. We propose following three main tasks (see Fig. 1) on handwritten documents.

Task A: Isolated Word Recognition - Task A, a consistent feature from our prior competitions, including ICFHR 2022 IHTR and ICDAR 2023 IHTR, focuses on designing robust algorithms for isolated word recognition. The primary objective is to create algorithms that accurately recognize each word within a provided set of word images.

Task B: Page Level Recognition and Reading - Task B is an innovative addition to this year’s competition, offering participants a fresh challenge in handwritten text recognition. The primary objective is to develop a robust algorithm capable of recognizing complete pages and preserving the reading order. Participants have the flexibility to approach the task with word or line-level segmentation, employing techniques that enhance recognition through finer granularity. The competition welcomes submissions for those opting not to use segmentation (word or line-level). If the performance without segmentation is comparable to the best scores achieved with segmentation, such submissions will be recognized as exceptional.

Task C: Visual Question Answer on Handwritten Documents - Beyond mere recognition, the contemporary focus in document analysis extends to extracting meaningful information tailored to users’ needs. Drawing inspiration from pioneering works such as DocVQA [15] and related tasks [16,17,18] for printed documents, this challenge introduces a VQA task specifically dedicated to handwritten documents. Based on the practices in the past work (such as DocVQA [15]), we use Average Normalized Levenshtein Similarity (ANLS) and Accuracy (Acc.) as the evaluation metric. These metrics provide a robust and comprehensive assessment of the performance of VQA algorithms on handwritten documents.

Evaluation Metrics

Task A: Isolated Word Recognition:

We use two famous evaluation metrics, Character Recognition Rate (\(CRR\)) (alternatively Character Error Rate, \(CER\)) and Word Recognition Rate (\(WRR\)) (alternatively Word Error Rate, \(WER\)), to evaluate the performance of the submitted word level recognizers. Error Rate (\(ER\)) is defined as

$$ { ER= \frac{S+D+I}{N} (1) } $$

Where \(S\) indicates number of substitutions, \(D\) indicates number of deletions, \(I\) indicates number of insertions and \(N\) number of instances in reference text. In case of \(CER\), Eq. (1) operates on character level and in case of \(WER\), Eq. (1) operates on word level. Recognition Rate (\(RR\)) is defined as

$$ { RR = 1-ER (2) } $$

In the case of \(CRR\), Eq. (2) operates on character level, and in the case of \(WRR\), Eq. (2) works on word level.

Task B: Page Level Recognition and Reading:

We average \(CRR\) and \(WRR\), defined in Eq. (2), over all pages in the test set and define new measure average page level \(CRR\) (\(PCRR\)) and average page level \(WRR\) (\(PWRR\)).

$$ { PCRR = \frac{1}{L} \sum_{i=1}^{L} CRR(i), \label{eqn_cr} (3) } $$

and

$$ { PWRR = \frac{1}{L} \sum_{i=1}^{L} WRR(i), \label{eqn_wr} (4) } $$

where \(CRR(i)\) and \(WRR(i)\) are \(CRR\) and \(WRR\) of \(i^{th}\) page in the test set.

Task C: Visual Question Answers on Handwritten Documents:

Similar to the past works [15,16], we use Average Normalized Levenshtein Similarity (\(ANLS\)) and Accuracy (\(Acc.\)) as the evaluation metric for this task. \(ANLS\) is given by Eq. (5), where \( N\) is the total number of questions, \(M\) are possible ground truth answers per question, \(i\ =\ {0...N}\), \(j\ =\ {0...M}\) and \(o_{q_i}\) is the answer to the \(i^{th}\) question \( q_i\).

$$ { \begin{split}

ANLS & =\frac{1}{N}\sum_{i=0}^{N}\Big( \max_j\ s(a_{ij}, o_{q_i}) \Big) \\

s(a_{ij}, o_{q_i}) & = \begin{cases}

1 - NL(a_{ij}, o_{q_i}), & \text{if $NL(a_{ij}, o_{q_i})<\tau$}.\\

0, & \text{otherwise}.

\end{cases}

\end{split} (5)} $$

where \( NL(a_{ij}\) , \(o_{q_i} )\) is the normalized Levenshtein distance (ranges between 0 and 1) between the strings \( a_{ij}\) and \( o_{q_i}\). The value of \(\tau\) can be set to add softness toward recognition errors. If the normalized edit distance exceeds \(\tau\), it is assumed that the error is because of an incorrectly located answer rather than an OCR mistake.