Dataset

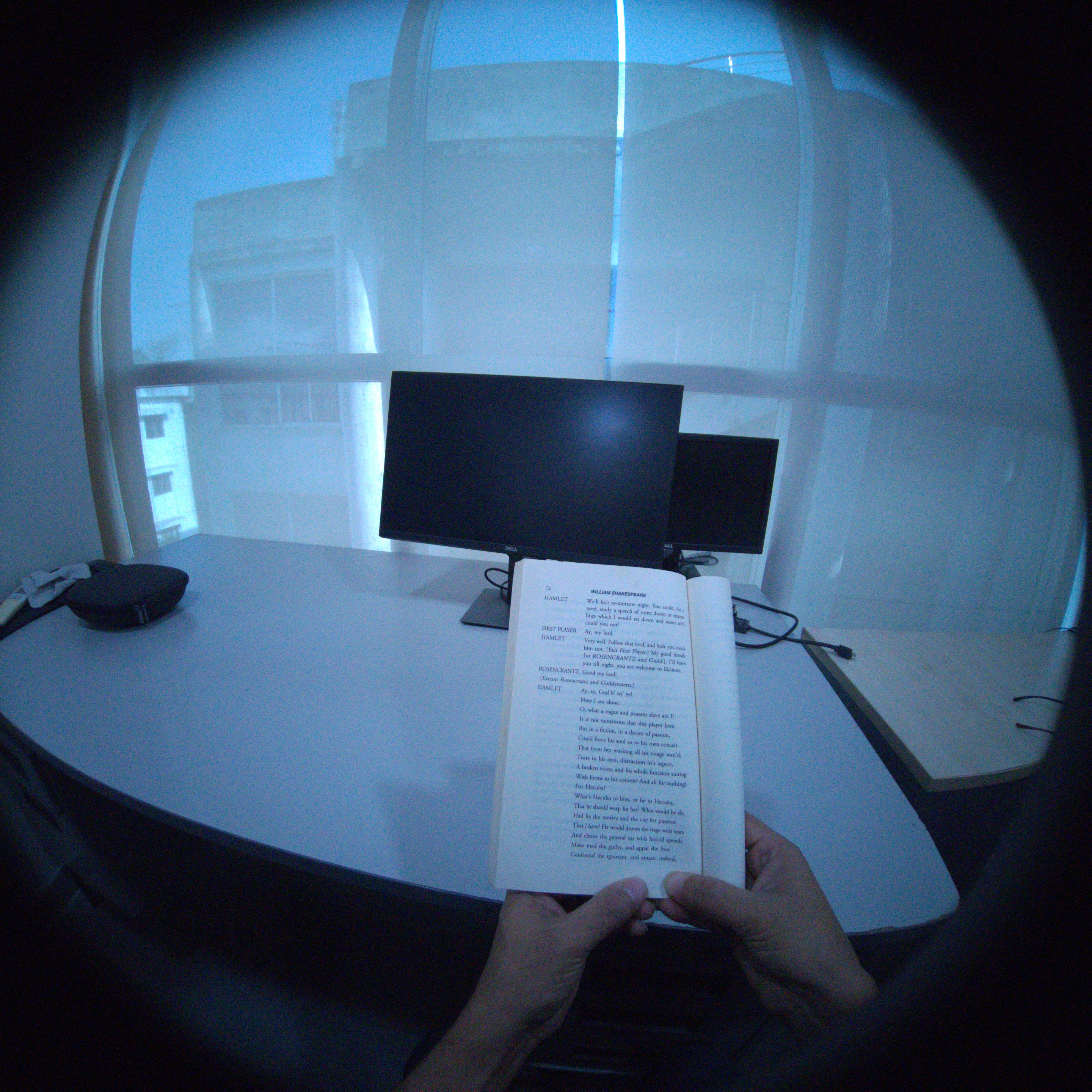

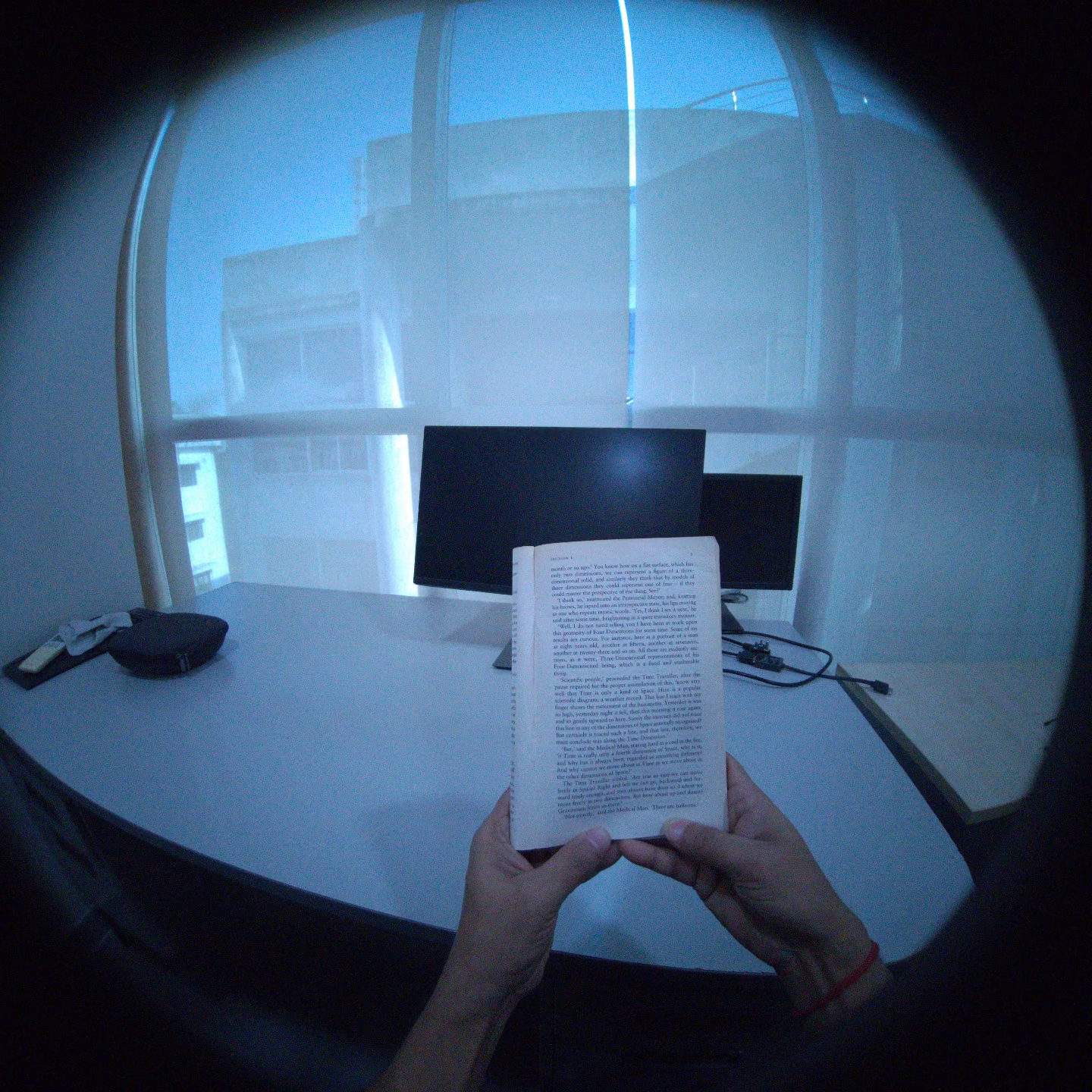

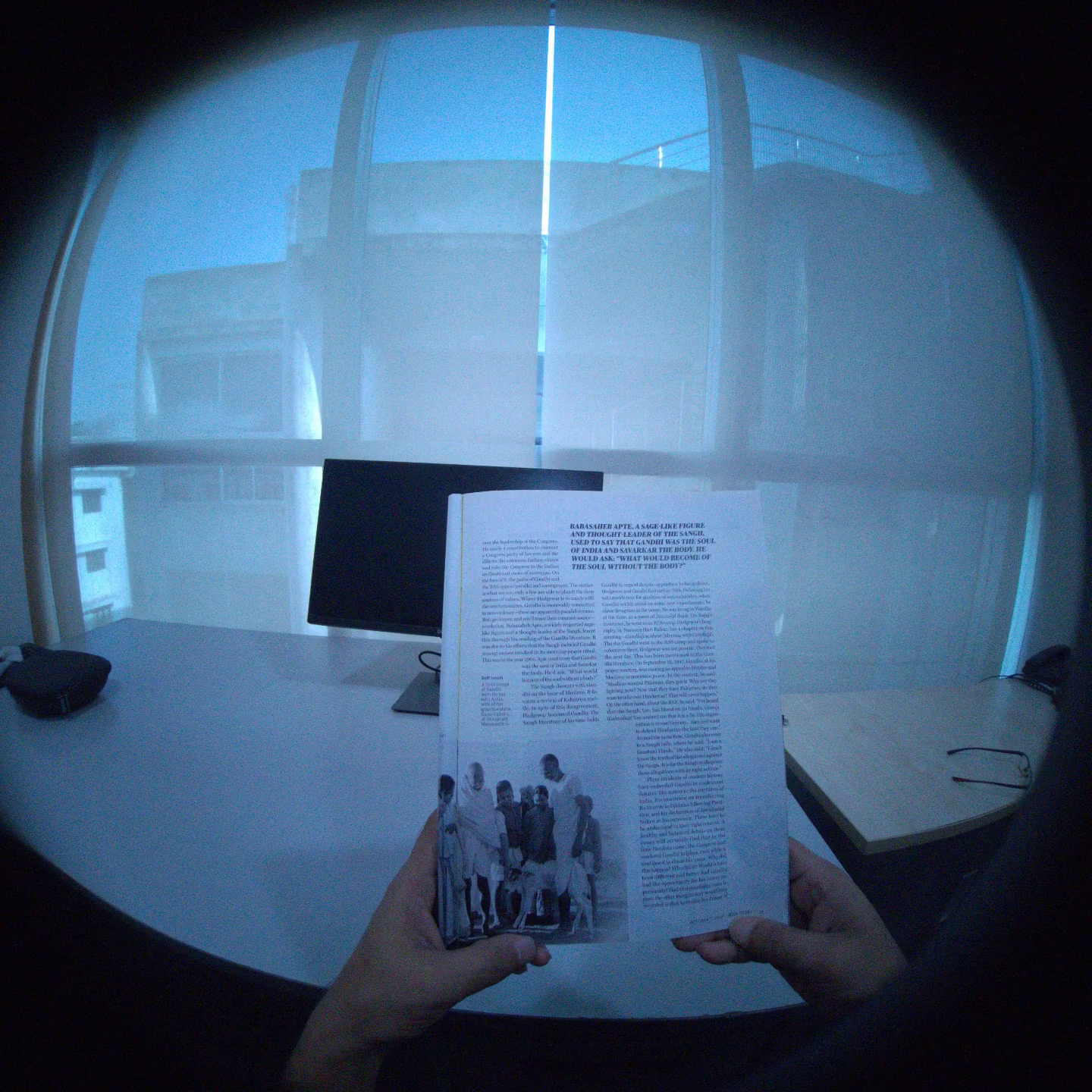

We have curated a diverse dataset of raw document images captured by Aria Glasses, covering a variety of English content genres such as books, newspapers, and magazines. The images were captured while reading in a range of indoor and outdoor settings and under varied lighting conditions, both daytime and nighttime. This ensures that the dataset includes a wide range of scenarios likely to be encountered in real-world reading situations. All images in the dataset are manually annotated. The annotations include (i) bounding boxes: word-level bounding boxes on each page, (ii) reading order: the reading order of the words, capturing the natural flow of content on the page, and (iii) text transcription: a transcription of the words present on each page, providing ground truth for content recognition. A few sample images are presented in Fig. 1.

Fig.1 shows sample images captured by Aria Glasses.

We have compiled a dataset named RDTAG-1.0, designed for recognizing isolated text and predicting reading order for word-level images extracted from documents. The dataset is divided into two subsets: a training set and a test set.

The training set can be downloaded from this link: RDTAG-1.0. The training set comprises document images captured by Aria Glasses in '.jpg' format and the corresponding ground truths in '.json' format (e.g., '1.jpg' and '1.json'). The '.json' ground truth file contains the bounding boxes, textual transcriptions, and reading order of all words within a document image. The annotation follows the natural reading order of a document, progressing from left to right and top to bottom for single-column documents. For multi-column documents, we maintain the same reading order within each column. Furthermore, we provide the textual transcription of the complete page in '.txt' format (e.g., '1.txt'), maintaining the same reading order. This transcription is essential for the page-level recognition task. Within the text file, '\n' denotes the end of one line and the start of the next, while '\n\n' signifies the end of one paragraph and the beginning of the next. Notably, text that is part of natural images, graphs, or plots is excluded from the ground truth annotation.
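The line and paragraph convention of the '.txt' transcription can be illustrated with a minimal sketch. The function name `split_transcription` and the sample string are hypothetical and are not part of the dataset tooling; the sketch relies only on the '\n' and '\n\n' separators described above.

```python
def split_transcription(text):
    """Split a page transcription into paragraphs, each a list of lines.

    Relies only on the stated convention: '\n' ends a line and
    '\n\n' ends a paragraph.
    """
    paragraphs = []
    for block in text.strip("\n").split("\n\n"):
        # Drop empty strings left over from stray extra newlines.
        lines = [line for line in block.split("\n") if line]
        if lines:
            paragraphs.append(lines)
    return paragraphs


# Made-up sample text, not taken from the dataset.
sample = "first line of paragraph one\nsecond line\n\nparagraph two\n"
for index, lines in enumerate(split_transcription(sample), start=1):
    print(index, lines)
```

Keeping the paragraph and line structure separate in this way makes it straightforward to rebuild the page text in reading order by joining lines with '\n' and paragraphs with '\n\n'.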

Test Set for Task A: Isolated Word Recognition in Low Resolution

The test set can be downloaded from this link: Test Set (Task A). The test set contains an image folder with word images (in ‘.jpg’ format) and a ‘test.txt’ file that lists the paths of the test word images.

The output should be saved as ‘result.txt’, in which each line contains the name of a test word image and the corresponding prediction, separated by a tab.
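A possible way to produce a file in this tab-separated layout is sketched below. It assumes that ‘test.txt’ lists one image path per line and that the "name" written to the output is the file name without its folder; neither detail is confirmed here, and the helper names `format_task_a_lines` and `write_lines` are hypothetical.

```python
from pathlib import Path


def format_task_a_lines(image_paths, predictions):
    """Build 'name<TAB>prediction' lines for the Task A result file.

    image_paths: paths as read from test.txt (assumed one per line).
    predictions: dict mapping each path to the recognized word.
    """
    lines = []
    for path in image_paths:
        name = Path(path).name  # assumption: file name only, no folder
        lines.append(f"{name}\t{predictions[path]}")
    return lines


def write_lines(lines, out_path="result.txt"):
    """Write one entry per line, UTF-8 encoded."""
    Path(out_path).write_text("\n".join(lines) + "\n", encoding="utf-8")
```

For example, `format_task_a_lines(["images/1.jpg"], {"images/1.jpg": "hello"})` yields a single line holding `1.jpg`, a tab, and `hello`.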

Test Set for Task B: Prediction of Reading Order

The test set can be downloaded from this link: Test Set (Task B). The test set consists of a folder of text files (in ‘.txt’ format, e.g., "1_1.txt"), each containing the bounding boxes and corresponding textual transcriptions of the words of one page image.

The output should be saved as ‘*.txt’ (e.g., if the name of the test file is "1_1.txt", the corresponding output should be saved as "1_1_result.txt"), in which each line contains the bounding box of a word, its textual transcription (as present in the test set), and its predicted sequence order, separated by tabs.
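The naming rule and the extra order column can be sketched as follows. The exact field layout of the input files is not specified here, so this sketch simply assumes that each input line is copied unchanged and the predicted order is appended after a tab; the function names are hypothetical.

```python
from pathlib import Path


def task_b_output_name(test_file):
    """Map a test file name such as '1_1.txt' to '1_1_result.txt'."""
    path = Path(test_file)
    return f"{path.stem}_result{path.suffix}"


def append_order(lines, order):
    """Append the predicted sequence order to each input line.

    lines: lines of one test file (bounding box and transcription),
           kept exactly as given (assumption about the layout).
    order: predicted position of each word, aligned with lines.
    """
    if len(lines) != len(order):
        raise ValueError("one predicted order value is needed per word")
    return [f"{line}\t{rank}" for line, rank in zip(lines, order)]
```

Raising an error on a length mismatch guards against silently dropping words, since `zip` would otherwise truncate to the shorter input.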

Test Set for Task C: Page Level Recognition and Reading

The test set can be downloaded from this link: Test Set (Task C). The test set contains page-level images (in ‘.jpg’ format).

There should be one output file per image. The output should be saved as ‘image name_result.txt’ (e.g., for image "304.jpg", the predicted result should be saved as "304_result.txt"), which contains the predicted text of the complete page image.
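A minimal sketch of writing one result file per page image is given below. The function name `write_task_c_outputs` and the choice of a dictionary as input are assumptions for illustration; only the "304.jpg" to "304_result.txt" naming rule comes from the description above.

```python
from pathlib import Path


def write_task_c_outputs(page_texts, out_dir):
    """Write '<image name>_result.txt' for every page image.

    page_texts: dict mapping an image file name (e.g. '304.jpg')
                to the predicted text of the complete page.
    out_dir:    folder for the result files (created if missing).
    Returns the list of file names written, in input order.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for image_name, text in page_texts.items():
        target = out / f"{Path(image_name).stem}_result.txt"
        target.write_text(text, encoding="utf-8")
        written.append(target.name)
    return written
```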

Participants are permitted to utilize supplementary publicly accessible datasets for pre-training purposes. Nonetheless, they are required to specify which datasets were used for pre-training.

The dataset is freely available for academic and research purposes.